Jake Xavier Checketts, Cole Wayant, Nate Nelson, Matt Vassar, PhD

Oklahoma State University - Center for Health Sciences

The aim of this study was to evaluate the current state of two publication practices in cardiothoracic journals: reporting guidelines and clinical trial registration.

We extracted data from the web-based instructions for authors of the top twenty cardiothoracic surgery journals as defined by the Google Scholar Metrics h5-index. Our primary analysis was to determine the level of adherence to reporting guidelines and trial registration policies by each journal.

Of the twenty cardiothoracic surgery journals, ten (10/20, 50%) did not mention a single guideline within their instructions for authors, while the remaining ten (10/20, 50%) mentioned one or more guidelines. ICMJE guidelines (15/20, 75%) and the CONSORT statement (10/19, 52.6%) were mentioned most often. Of the twenty cardiothoracic surgery journals, nine (9/20, 45%) did not mention trial or review registration, while the remaining eleven (11/20, 55%) mentioned at least one of the two.

Our investigation of the adherence to reporting guidelines and trial registration policies in cardiothoracic journals demonstrates a need for improvement. Reporting guidelines have been shown to improve methodological and reporting quality, thereby preventing bias from entering the literature. We recommend the adoption of reporting guidelines and trial registration policies by all cardiothoracic journals.

Cardiothoracic surgeons rely on results from well-designed, well-executed studies to provide informed patient care. Efforts should be made to ensure studies are conducted thoughtfully and published reports contain the necessary information to draw informed conclusions from the results. In 2006, Tiruvoipati et al. evaluated the reporting quality of randomized trials in cardiothoracic surgery journals, concluding that trials were suboptimally reported compared with the requirements of the Consolidated Standards of Reporting Trials (CONSORT) Statement.1 Results from their survey of cardiothoracic trialists indicated that over 40% would likely have reported the trial differently had the journal required adherence to CONSORT. Over 50% of these trialists believed the reporting quality of trials would improve if cardiothoracic journals required CONSORT adherence. Since publication of this study, efforts have been made to improve the quality of reporting for many study designs. For example, the Enhancing the Quality and Transparency of Health Research (EQUATOR) Network was established to advance high-quality reporting for health research. To date, their collection of reporting guidelines for various study designs exceeds 300, including Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) for systematic reviews, Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) for observational studies, and Standards for Reporting Diagnostic Accuracy Studies (STARD) for diagnostic accuracy studies.2 Evidence from the International Journal of Surgery suggests that reporting quality improved following a change in the journal’s policy to support adherence to reporting guidelines.3

A second mechanism to improve study design and execution is the registration of studies in a public repository prior to conducting them.4,5 In 2005, the International Committee of Medical Journal Editors (ICMJE) established a policy that participating journals require trial registration as a precondition for publication.6 Two years later, the United States Congress passed the Food and Drug Administration Amendments Act, which mandated the prospective registration of applicable clinical trials prior to patient enrollment.7 Position statements from the World Health Organization and the United Nations Secretary-General’s High-Level Panel on Access to Medicines advocate for governments around the world to enact legislation requiring clinical trial registration, including study protocols and trial data, regardless of the findings.6,8 Prospective registration is not limited to clinical trials. Following development of the PRISMA guidelines for systematic reviews, the Centre for Reviews and Dissemination established PROSPERO, a prospective register for systematic reviews.9 As with clinical trials, prospective registration of systematic reviews is designed to provide greater transparency and accountability, minimizing bias.9

Evidence indicates that many cardiothoracic trials are not correctly registered. Wiebe et al. found that a majority of trials were either registered during patient enrollment or after trial completion.10 This study also found that cardiothoracic trials were prone to selective reporting bias, a practice in which outcomes listed during registration are altered in the published report, often by amending outcomes to favor statistical significance.10,11 In this study, we investigated the policies of twenty cardiothoracic surgery journals regarding adherence to reporting guidelines and study registration with the intent of understanding if journals are using these mechanisms to minimize bias.

Of the twenty cardiothoracic surgery journals, the instructions for authors of five (25%) journals referenced the EQUATOR Network, and fifteen (75%) journals referenced the ICMJE guidelines; ten (50%) did not mention any guideline, while the remaining ten (50%) mentioned one or more guidelines.

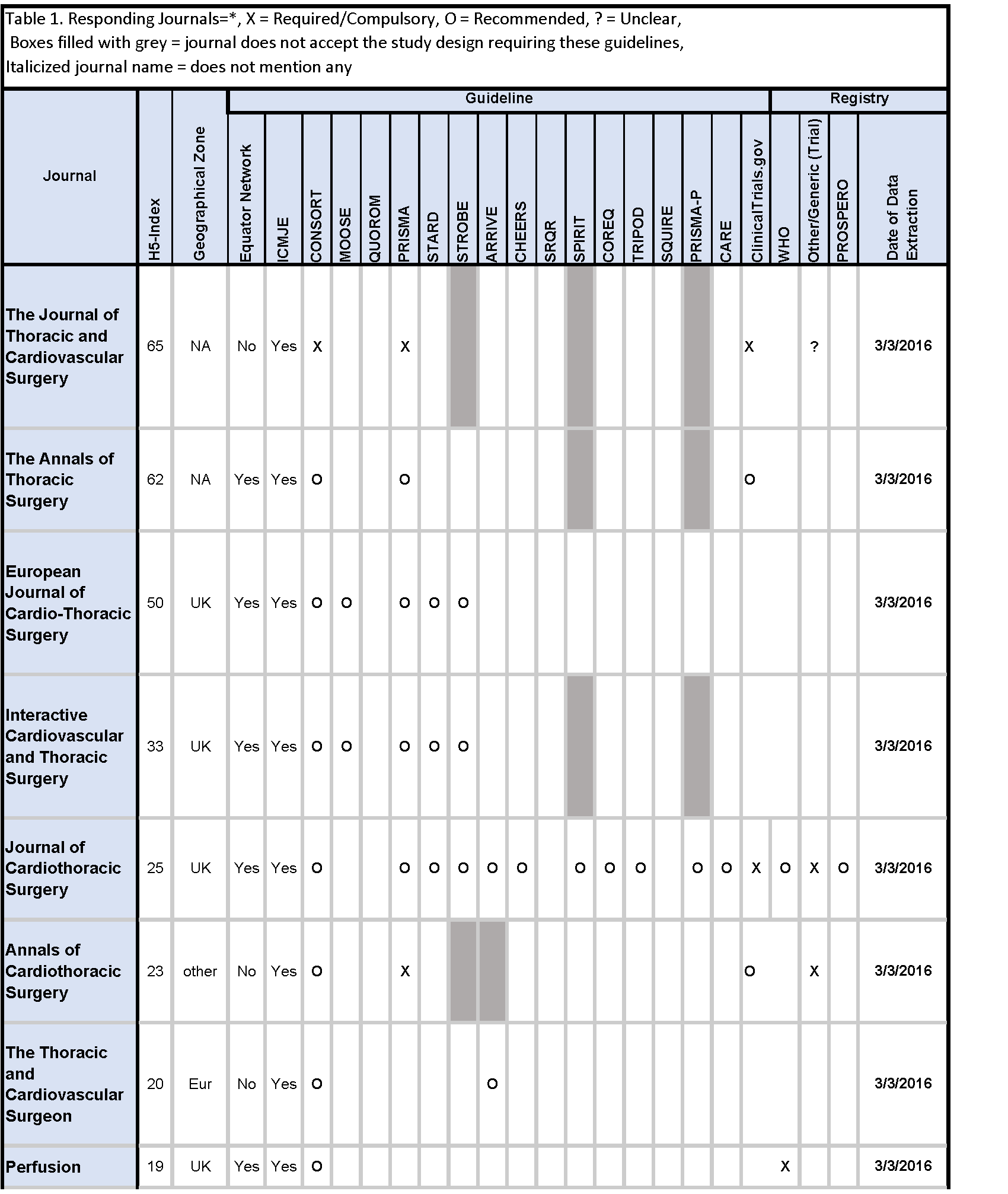

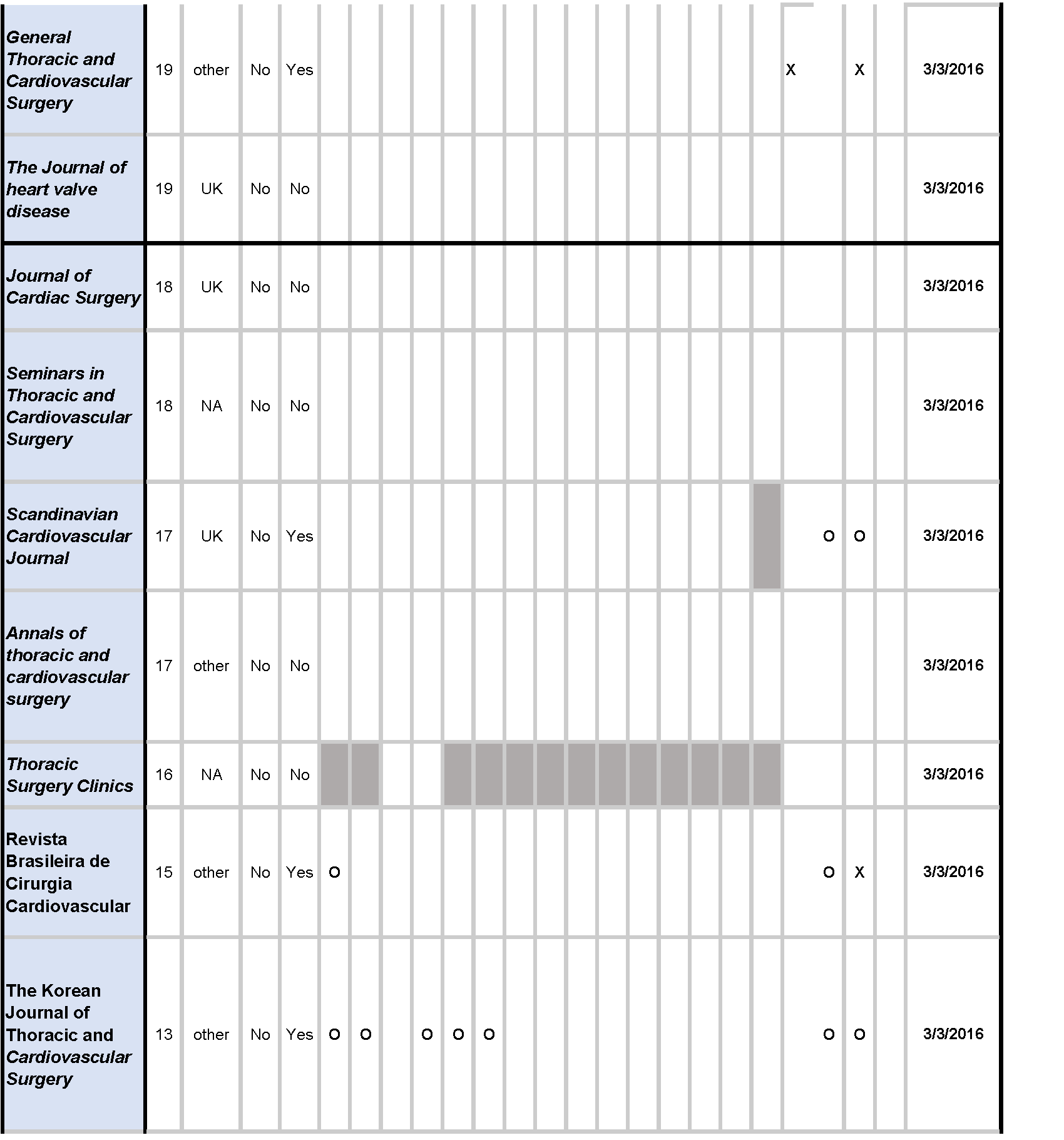

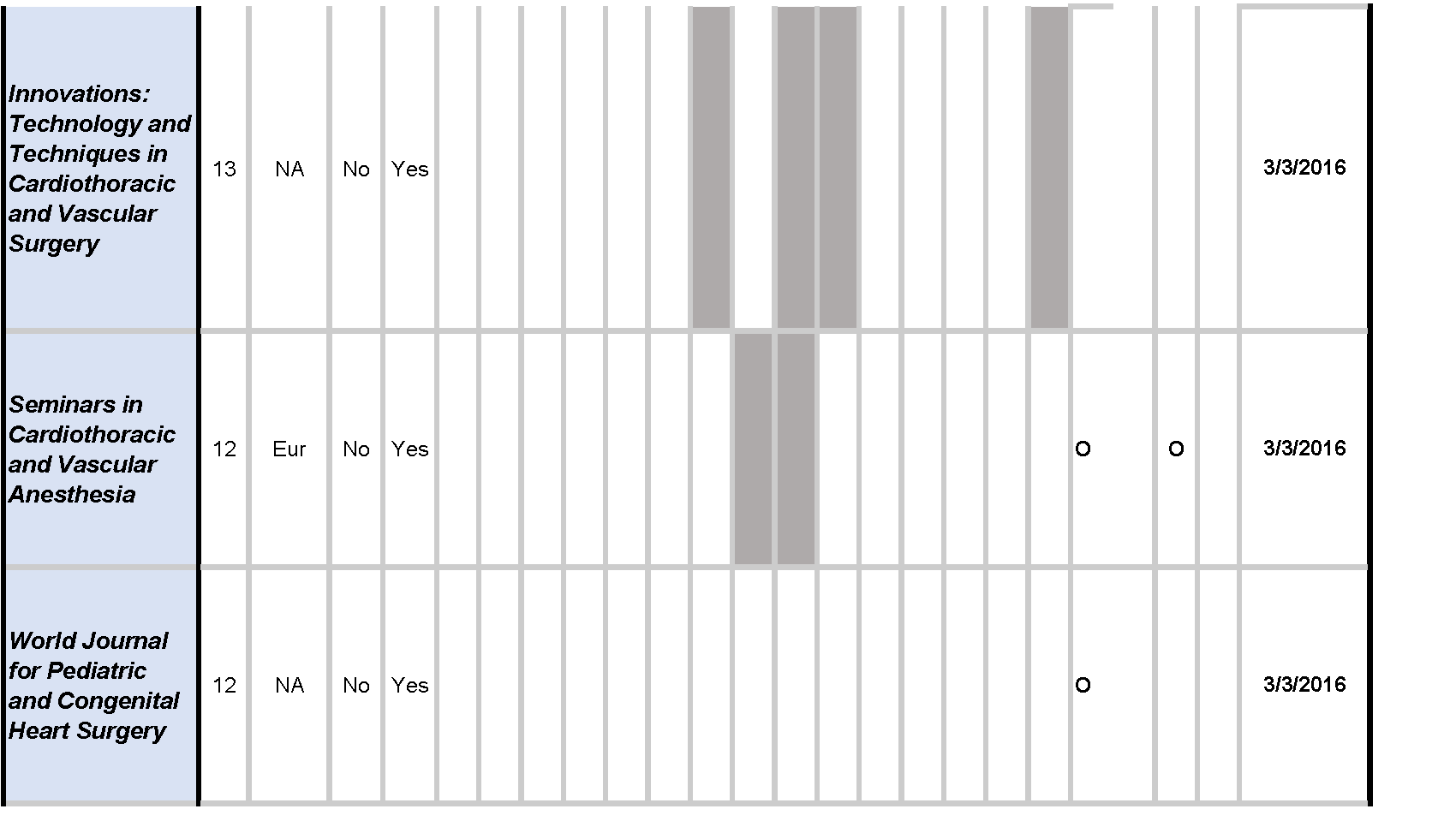

Across reporting guidelines, the CONSORT statement (10/19, 52.6%) was the most frequently mentioned; it was required by one journal (1/10, 10%) and recommended by nine (9/10, 90%). The PRISMA guidelines (7/20, 35%) were the second most frequently mentioned, required by two journals (2/7, 28.6%) and recommended by five (5/7, 71.4%), followed by the STARD (4/19, 21.1%) and STROBE (4/19, 21.1%) guidelines. The QUOROM statement, SQUIRE, and SRQR were not mentioned by any journals [Table 1].

Of the twenty cardiothoracic surgery journals, nine (45%) did not mention trial or review registration, while the remaining eleven (55%) mentioned at least one of the two. Trial registration through ClinicalTrials.gov was mentioned by seven (7/20, 35%) journals, required by three (3/7, 42.9%), and recommended by four (4/7, 57.1%) journals. Registration through the World Health Organization was mentioned by five (5/20, 25%) journals, required by one (1/5, 20.0%), and recommended by four (4/5, 80.0%) journals. Eight (8/20, 40%) journals required trial registration through any trial registry. Review registration through the PROSPERO platform and review registration through any review registry platform were each recommended by one (1/20, 5.0%) journal [Table 1].
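The percentages reported above follow directly from the journal counts. As a minimal sketch for readers who wish to recheck the arithmetic, the snippet below recomputes a selection of them, assuming rounding to one decimal place. The counts are copied from the text; the dictionary labels and the `percent` helper are illustrative names and are not part of the study's methods.

```python
# (numerator, denominator) pairs copied from the counts reported in the text.
# Labels are illustrative only.
reported_counts = {
    "ICMJE guidelines mentioned": (15, 20),
    "CONSORT mentioned": (10, 19),
    "PRISMA mentioned": (7, 20),
    "STARD mentioned": (4, 19),
    "ClinicalTrials.gov mentioned": (7, 20),
    "ClinicalTrials.gov required, among mentioning journals": (3, 7),
    "WHO registration recommended, among mentioning journals": (4, 5),
}


def percent(numerator: int, denominator: int) -> float:
    """Return the proportion as a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Recompute every percentage from its count pair.
percentages = {label: percent(n, d) for label, (n, d) in reported_counts.items()}

for label, value in percentages.items():
    n, d = reported_counts[label]
    print(f"{label}: {n}/{d} = {value}%")
```

Note that the denominators differ between guidelines (19 for CONSORT and STARD, 20 for PRISMA), so each percentage must be read against its own denominator.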

This study evaluated the current positions of cardiothoracic surgery journals on reporting guideline and study registration requirements as described in their instructions for authors. Our results suggest that half of the journals did not mention reporting guidelines. Moher et al. state, “The widespread poor reporting of medical research represents a system failure … there is clearly a collective failure across many key groups to appreciate the importance of adequate reporting of research.”15 Inadequate study reporting inhibits readers from accurately interpreting results or leads to inaccurate interpretation. Some have suggested that this borders on unethical practice when biased results receive false credibility.16 Zonta and De Martino argue that since surgical trialists dislike randomization because of the uncertainty it creates, “It seems doubly important that surgical trials should be scrupulously reported to allow interpretation of any potential bias.”17 Since multiple studies have concluded that the use of reporting guidelines has beneficial effects, editors of cardiothoracic surgery journals would be well-advised to consider endorsing them.2,18,19 However, endorsement does not always translate to adherence.2 To improve research reporting, all stakeholders in the research process must assume responsibility. While journals play an important role, academic and other research organizations, funders, regulatory bodies, and authors must become more proactive to ensure that research is accurately, completely, and transparently reported.2

Research from 2006 found that the reporting quality of randomized controlled trials in cardiothoracic surgery was suboptimal: allocation concealment was not described in 86% of trials, the process to generate a random allocation sequence was not described in 78%, and blinding of outcome assessors was not described or inadequately described in 63%.1 In addition, nearly 60% of the trialists were unaware of the CONSORT statement, which has been shown to positively influence the manner in which randomized controlled trials are conducted.14 Our study found that half of the included journals did not mention the CONSORT statement or any other reporting guideline. Failure to adopt reporting guidelines in cardiothoracic surgery journals may continue to result in poorly reported, or worse, poorly conducted research, which may give these studies unwarranted credibility.1,15–17,20 As stated by Zonta and De Martino, “Poorly conducted trials are a waste of time, effort, and money. The most dangerous risk associated with poor-quality reporting is an overestimate of the advantages of a given treatment. This could lead to the adoption of policies based off of unreliable evidence that directly harms patient care.”17 Study information in surgical specialties like cardiothoracic surgery should be carefully reported to allow readers to understand any potential bias and allow for honest interpretations of findings.17

Previous research has found that the use of reporting guidelines improves the completeness of study reporting. Agha et al. found that changes in the International Journal of Surgery’s policy on reporting guidelines resulted in an increase in the adherence to CONSORT by 50–70%, PRISMA by 48–76%, and STROBE by 12%.3 As of 2014, 28 journals from physical medicine and rehabilitation have formed a collaboration to improve research reporting.21 This collaboration requires that both authors and peer reviewers make use of reporting guidelines when writing and reviewing research studies. It would be reasonable for cardiothoracic surgery journal editors to consider forming such a collaboration, especially if evidence suggests that such collaborations improve the quality of research reporting.

Study registration requirements by journals were also examined in our study. Medical journal editors believe that prospective clinical trial registration is the single most important tool to ensure unbiased reporting, since this practice allows for the identification of potential outcome reporting bias and/or other deviations from the study protocol.22 In 2007, clinical trial registration became a requirement for investigators in accordance with United States law.23 The Food and Drug Administration Amendments Act requires that all phase 2–4 trials involving FDA-approved drugs, devices, or biologics be registered on ClinicalTrials.gov before beginning the study.23 Mathieu et al. found that less than 50% of all studies were prospectively registered, and 25% of studies lacked registration.24 Overall, trial registration rates vary between specialties, with many published randomized trials lacking prospective registration.10,25–29 In cardiothoracic surgery, Wiebe et al. found that nearly 60% of clinical trials were not prospectively registered.10 The listed pre-specified outcomes of the prospectively registered trials often conflicted with those in the published report, and outcome discrepancies were found in nearly 50% of the evaluated trials. Wiebe et al. state that “these conflicts could be the result of outcome reporting bias if the upgrade or downgrade of an outcome favors statistical significance.”10 Our study found that almost 50% of the included journals made no mention of prospective trial registration, leaving cardiothoracic surgery journals open to biased reporting of results. Improving the poor compliance with prospective registration in cardiothoracic surgery is contingent on further development of, and adherence to, policies requiring registration of a trial before journal submission.

Although we attempted to contact journal editors to inquire about the types of articles they accepted, some never responded to our inquiry. Thus, when the instructions for authors were unclear about which study types a journal accepted, we may have inadvertently listed that journal as not mentioning a reporting guideline for an article type that it did not accept.

Follow-up studies evaluating the influence of reporting guidelines on research reporting may be warranted. For example, researchers could evaluate adherence to CONSORT criteria in journals requiring or recommending CONSORT and compare these results to CONSORT adherence in journals without policies regarding CONSORT. Evaluation should be performed before and after adoption of a reporting guideline or trial registry policy to examine changes in adherence following the publication of these policies.

Nearly half of all cardiothoracic surgery journals made no mention of reporting guidelines or trial registration policies. This could place trials in cardiothoracic surgery at risk of methodological flaws or bias. We recommend cardiothoracic surgery journals without reporting guidelines or trial registration policies begin recommending and slowly implementing these policies.

1. Tiruvoipati R, Balasubramanian SP, Atturu G, Peek GJ, Elbourne D. Improving the quality of reporting randomized controlled trials in cardiothoracic surgery: the way forward. J Thorac Cardiovasc Surg. 2006; 132(2): 233–40.

2. Simera I, Moher D, Hirst A, Hoey J, Schulz KF, et al. Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Med. 2010; 8: 24.

3. Agha RA, Fowler AJ, Limb C, Whitehurst K, Coe R, et al. Impact of the mandatory implementation of reporting guidelines on reporting quality in a surgical journal: A before and after study. Int J Surg. 2016; 30: 169–72.

4. Bonati M, Pandolfini C. Trial registration, the ICMJE statement, and paediatric journals. Arch Dis Child. 2006; 91(1): 93.

5. Rivara FP. Registration of Clinical Trials. Arch Pediatr Adolesc Med. 2005; 159(7): 685.

6. Deangelis CD, Drazen JM, Frizelle FA, Haug C, Hoey J, et al. Is this clinical trial fully registered? A statement from the International Committee of Medical Journal Editors. JAMA. 2005; 293(23): 2927–9.

7. Wood SF, Perosino KL. Increasing transparency at the FDA: the impact of the FDA Amendments Act of 2007. Public Health Rep. 2008; 123(4): 527–30.

8. United Nations Secretary-General’s High-Level Panel on Access to Medicines. Report: Promoting Innovation and Access to Health Technologies. New York, NY: United Nations; 2016.

9. Booth A, Clarke M, Dooley G, Ghersi D, Moher D, et al. The nuts and bolts of PROSPERO: an international prospective register of systematic reviews. Syst Rev. 2012; 1: 2.

10. Wiebe J, Detten G, Scheckel C, Gearhart D, Wheeler D, et al. The heart of the matter: Outcome reporting bias and registration status in cardio-thoracic surgery. Int J Cardiol. 2017; 227: 299–304.

11. Chan A-W, Hróbjartsson A, Haahr MT, Gøtzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004; 291(20): 2457–65.

12. Lang TA, Altman DG. Basic statistical reporting for articles published in biomedical journals: the SAMPL Guidelines. In: Smart P, Maisonneuve H, Polderman A, eds. Science Editors’ Handbook, 2nd ed. Split: European Association of Science Editors; 2013: 23–6.

13. Dillman DA, Smyth JD, Christian LM. Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method, 4th ed. Hoboken, NJ: John Wiley; 2014.

14. Meerpohl JJ, Wolff RF, Niemeyer CM, Antes G, von Elm E. Editorial policies of pediatric journals: survey of instructions for authors. Arch Pediatr Adolesc Med. 2010; 164(3): 268.

15. Moher D, Altman D, Schulz K, Simera I, Wager E. Guidelines for Reporting Health Research: A User’s Manual. Chichester, UK: Wiley Blackwell; 2014.

16. Moher D, Schulz KF, Altman DG, CONSORT. The CONSORT statement: revised recommendations for improving the quality of reports of parallel group randomized trials. BMC Med Res Methodol. 2001; 1: 2.

17. Zonta S, De Martino M. Standard requirements for randomized controlled trials in surgery. Surgery. 2008; 144(5): 838–9.

18. Smidt N, Rutjes AWS, van der Windt DAWM, Ostelo RW, Bossuyt PM, et al. The quality of diagnostic accuracy studies since the STARD statement: has it improved? Neurology. 2006; 67(5): 792–7.

19. Plint AC, Moher D, Morrison A, Schulz K, Altman DG, et al. Does the CONSORT checklist improve the quality of reports of randomised controlled trials? A systematic review. Med J Aust. 2006; 185(5): 263–7.

20. McCulloch P, Taylor I, Sasako M, Lovett B, Griffin D. Randomised trials in surgery: problems and possible solutions. BMJ. 2002; 324(7351): 1448–51.

21. EQUATOR Network. Collaborative initiative involving 28 rehabilitation and disability journals. Equator Network. 2016. http://www.equator-network.org/2014/04/09/collaborative-initiative-involving-28-rehabilitation-and-disability-journals (accessed May 18, 2017).

22. Weber WE, Merino JG, Loder E. Trial registration 10 years on. BMJ. 2015; 351: h3572.

23. United States Food and Drug Administration Amendments Act (FDAAA) of 2007 (Public Law 110-85), amending the Federal Food, Drug, and Cosmetic Act, 21 U.S.C. §301.

24. Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009; 302(9): 977–84.

25. van de Wetering FT, Scholten RJPM, Haring T, Clarke M, Hooft L. Trial registration numbers are underreported in biomedical publications. PLoS One. 2012; 7(11): e49599.

26. Hardt JLS, Metzendorf M-I, Meerpohl JJ. Surgical trials and trial registers: a cross-sectional study of randomized controlled trials published in journals requiring trial registration in the author instructions. Trials. 2013; 14: 407.

27. Killeen S, Sourallous P, Hunter IA, Hartley JE, Grady HLO. Registration Rates, Adequacy of Registration, and a Comparison of Registered and Published Primary Outcomes in Randomized Controlled Trials Published in Surgery Journals. Ann Surg. 2014; 259(1): 193.

28. Anand V, Scales DC, Parshuram CS, Kavanagh BP. Registration and design alterations of clinical trials in critical care: a cross-sectional observational study. Intensive Care Med. 2014; 40(5): 700–22.

29. Hooft L, Korevaar DA, Molenaar N, Bossuyt PMM, Scholten RJPM. Endorsement of ICMJE’s Clinical Trial Registration Policy: a survey among journal editors. Neth J Med. 2014; 72(7): 349-5.